xAPI Dashboard Exploration

When AI-driven analytics reveal unexpected insights

Project Context: An exploration in leveraging Claude AI to analyze xAPI data from my Customer Service Recovery portfolio project, revealing how context shapes data interpretation and optimization opportunities.

My role: Learning Experience Designer, Learning Analytics Explorer

Tools used: Veracity LRS, Claude (Sonnet 4.5), CSV Export

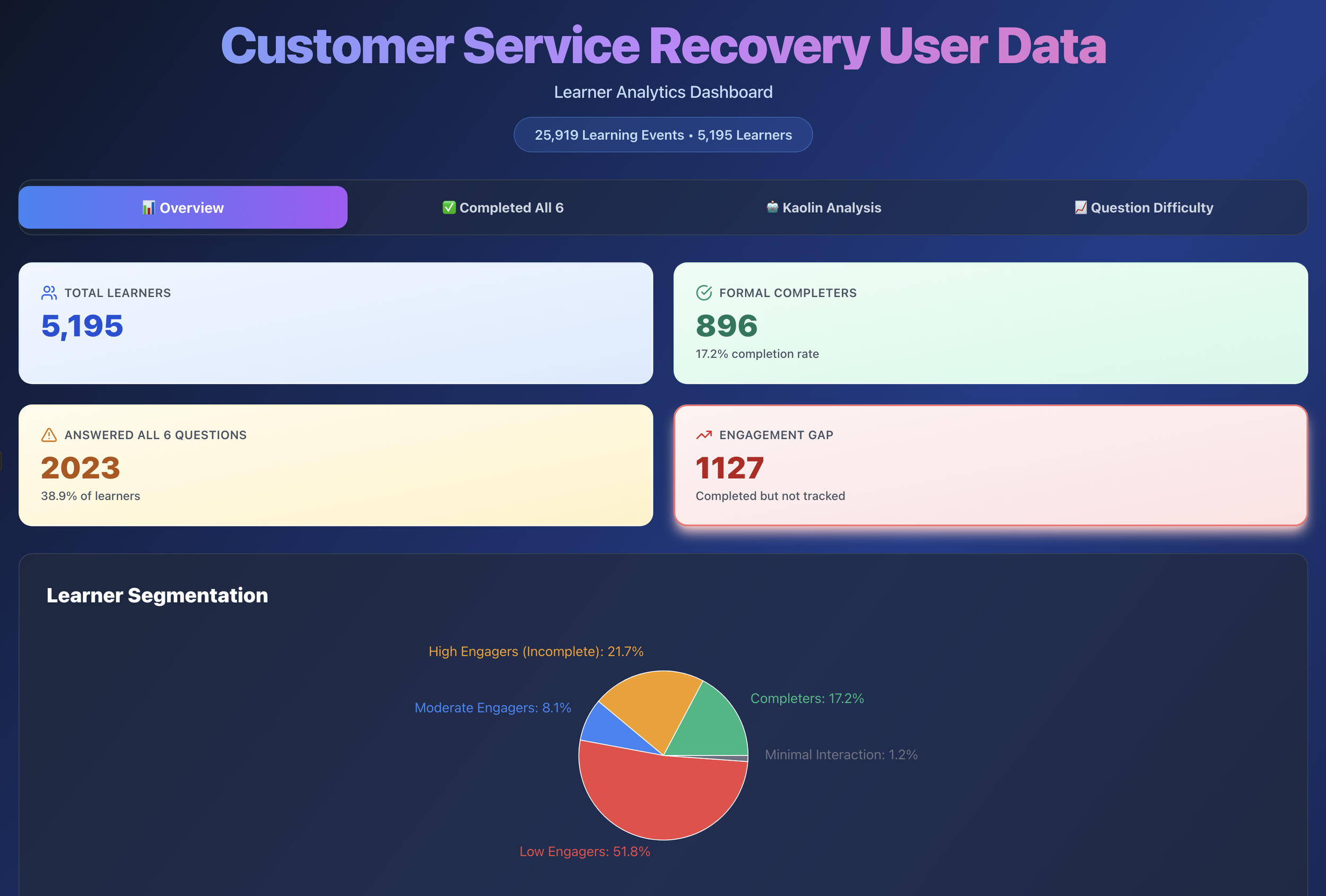

Insights: 2,023 of 5,195 visitors (39%) completed all six questions, proving the value of scenario-based learning even in a portfolio context

Problem and Solution

I'd been collecting xAPI data from my Customer Service Recovery project for 4.5 years, but had never analyzed it. I wanted to understand how visitors experienced the scenario-based learning in a portfolio context and what insights could improve future projects.

I also wanted to explore whether AI could help me interpret learning analytics more effectively than traditional dashboard tools.

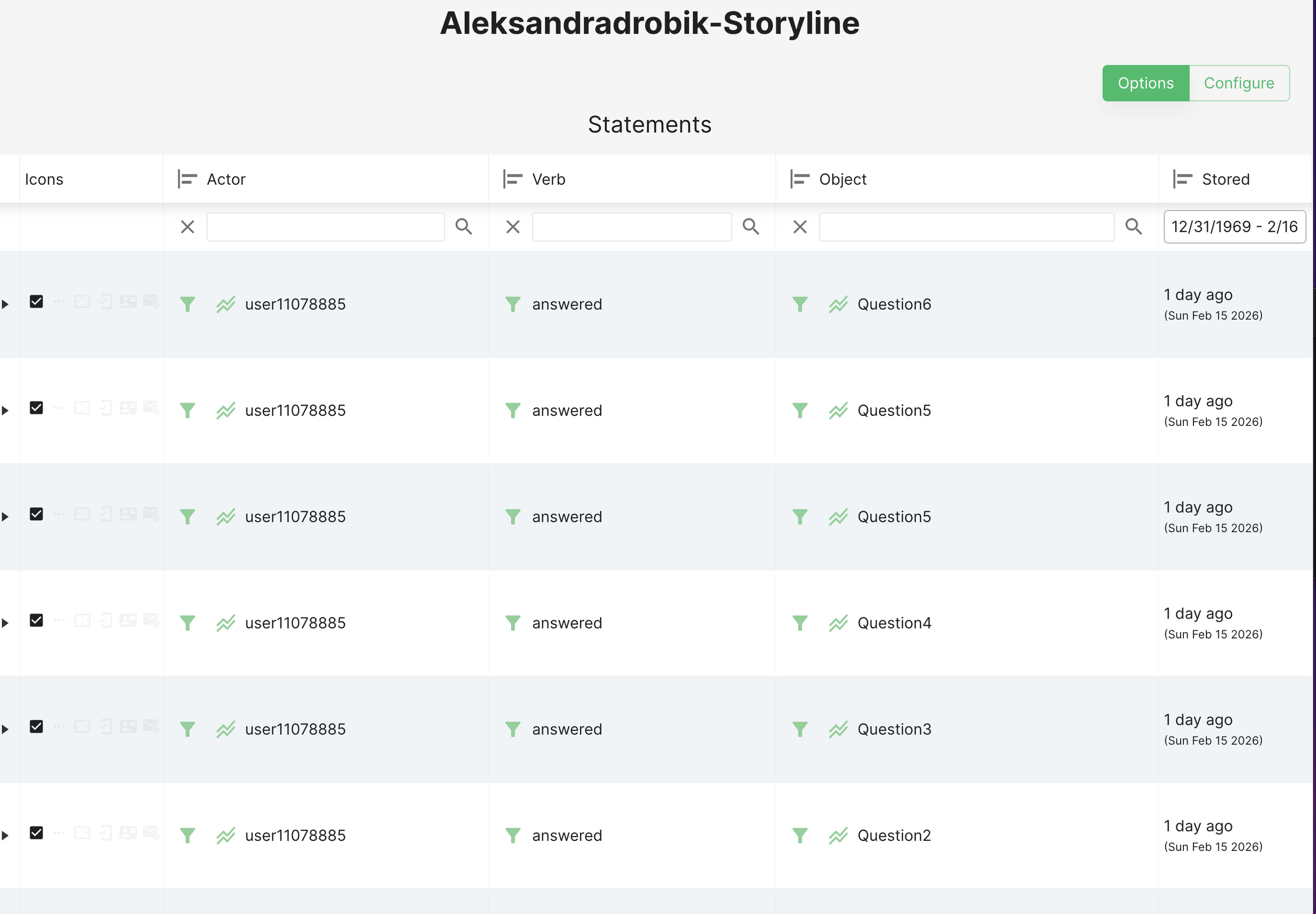

I used Claude AI to analyze the xAPI data through conversational prompting, comparing the results against what Veracity LRS's built-in dashboard would show. The experiment revealed something unexpected: context determines meaning.

The First Analysis: When AI Assumes the Wrong Context

My initial prompts asked Claude to analyze completion rates and engagement patterns. The AI generated a dashboard showing what looked like a learning crisis:

Claude's interpretation:

Severe engagement drop-off after Question 1

Kaolin (the mentor feature) had a negative impact on completion

Only 896 of 5,195 learners (17%) completed with certificates

If this were a real training program, these results would be alarming.

But it wasn't. This was a portfolio demonstration piece, not mandatory employee training. The problem? I hadn't given Claude the right context.

The Second Analysis: Context Changes Everything

I started a fresh conversation with Claude, this time providing context:

My prompt: Can you take this data set and provide insights on how users experienced this learning asset as a portfolio piece within this portfolio page: https://www.aleksdrobik.com/portfolio/project-customer-service-recovery? What I am looking for is to understand how visitors explored my project and what insights I could take and use for future projects.

Claude returned a neat report I could download and review, an analytics visualization image, and a second image with additional data.

To dig deeper, I asked a follow-up prompt: Based on these recommendations, would you say that in a portfolio piece, having just one or two questions for someone to experience and test out would keep engagement on the page longer?

Then one more prompt: Would you be able to come up with a dashboard that could show optimization and where I could improve to be geared for a future iteration?

Claude's new interpretation:

39% completion rate is strong for optional portfolio exploration

Visitors who engaged with the mentor feature showed genuine interest

Drop-off after Question 1 is expected (visitors sampled the experience)

The same data, different story. With proper context, the analytics revealed optimization opportunities rather than failures.

So what does that look like?

Key Insights from the Exploration

What the data revealed:

5,195 users visited the experience over 4.5 years

2,023 users (39%) attempted all six questions

896 users (17%) completed with certificate download

Most visitors sampled 1-2 questions, then moved on (portfolio browsing behavior)
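For readers who want to derive numbers like these themselves, here is a minimal Python sketch of how a funnel could be counted from a CSV export. It is not the workflow used in this exploration. It assumes one xAPI statement per row with hypothetical `actor`, `verb`, and `object` columns and short verb labels (`answered`, `downloaded`); a real Veracity LRS export may use different headers and full verb IRIs, so treat those names as placeholders.

```python
import csv
import io
from collections import defaultdict

# Hypothetical column names and verb labels: a real Veracity LRS CSV export
# may use different headers and full xAPI verb IRIs instead of short labels.
ACTOR_COL, VERB_COL, OBJECT_COL = "actor", "verb", "object"
ANSWERED_VERB = "answered"
CERTIFICATE_VERB = "downloaded"
TOTAL_QUESTIONS = 6


def funnel(rows):
    """Count visitors, 1-2 question samplers, six-question finishers, and certificate downloads."""
    visitors = set()
    questions_by_actor = defaultdict(set)  # distinct question IDs each visitor answered
    certificate_actors = set()
    for row in rows:
        actor = row[ACTOR_COL]
        visitors.add(actor)
        if row[VERB_COL] == ANSWERED_VERB:
            questions_by_actor[actor].add(row[OBJECT_COL])
        elif row[VERB_COL] == CERTIFICATE_VERB:
            certificate_actors.add(actor)
    counts = [len(qs) for qs in questions_by_actor.values()]
    return {
        "visitors": len(visitors),
        "sampled_1_to_2": sum(1 for n in counts if 1 <= n <= 2),
        "all_questions": sum(1 for n in counts if n >= TOTAL_QUESTIONS),
        "certificates": len(certificate_actors),
    }


def pct(part, total):
    """Share of total as a whole-number percentage (0 when total is 0)."""
    return round(100 * part / total) if total else 0


# Tiny made-up sample, for illustration only (not the project's data).
sample = ["actor,verb,object"]
sample += [f"visitor-a,answered,q{i}" for i in range(1, 7)]
sample += [
    "visitor-a,downloaded,certificate",
    "visitor-b,answered,q1",
    "visitor-c,answered,q1",
    "visitor-c,answered,q2",
]
summary = funnel(csv.DictReader(io.StringIO("\n".join(sample))))
print(summary)  # {'visitors': 3, 'sampled_1_to_2': 2, 'all_questions': 1, 'certificates': 1}

# The same percentage helper applied to the totals reported above.
print(pct(2023, 5195), pct(896, 5195))  # 39 17
```

Counting distinct question IDs per visitor, rather than raw `answered` statements, keeps a visitor who retries a question from being counted twice.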

What I learned about AI-assisted analytics:

Context is everything. The same dataset told two completely different stories depending on how I framed the analysis.

Conversational prompting reveals nuance. Unlike static dashboards, I could ask follow-up questions and refine the analysis iteratively.

AI assumes purpose. Without context, Claude assumed this was employee training and flagged engagement issues. With context, it recognized portfolio browsing patterns.

Kaolin feature recommendation. Claude's read on Kaolin didn't match how the mentor feature is actually programmed within the experience.

Optimization recommendations:

For portfolio pieces, consider 1-2 question samples rather than full six-question scenarios

Front-load the most interesting decision points to hook visitors quickly

Add a "skip to results" option for time-conscious portfolio reviewers

Reflection: This exploration taught me that data without context is just numbers.

The xAPI data I'd been collecting for years contained valuable insights, but only when I asked the right questions with the right framing. Claude didn't just generate dashboards; it helped me think critically about what the data meant in different contexts. As learning experience designers, we often focus on collecting data but not enough on the interpretive layer. This project reminded me that analytics tools are only as good as the questions we ask and the context we provide.

An important note: when using data, especially data pulled from multiple sources, make sure it follows a consistent structure and governance and that it's clean. For this exploration, I used a single source and checked my CSV extract.
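As an illustration of what "checking the extract" can involve, here is a minimal Python sketch of a few basic checks: required columns present, no blank required fields, no duplicate statement IDs, and parseable timestamps. It is a sketch under assumptions, not the check performed for this project. The column names (`id`, `actor`, `verb`, `object`, `timestamp`) are hypothetical, and the reported line numbers assume no field contains an embedded newline.

```python
import csv
import io
from datetime import datetime

# Hypothetical column names; adjust to match the actual export.
REQUIRED_COLUMNS = ("id", "actor", "verb", "object", "timestamp")


def check_extract(text):
    """Return a list of human-readable problems found in a CSV extract."""
    reader = csv.DictReader(io.StringIO(text))
    absent = [col for col in REQUIRED_COLUMNS if col not in (reader.fieldnames or [])]
    if absent:
        return [f"header: missing column(s) {', '.join(absent)}"]

    problems = []
    seen_ids = set()
    for line_no, row in enumerate(reader, start=2):  # line 1 is the header
        missing = [col for col in REQUIRED_COLUMNS if not (row.get(col) or "").strip()]
        if missing:
            problems.append(f"line {line_no}: missing {', '.join(missing)}")
            continue
        if row["id"] in seen_ids:
            problems.append(f"line {line_no}: duplicate statement id {row['id']}")
        seen_ids.add(row["id"])
        try:
            # Older Python versions reject a trailing "Z", so normalize it first.
            datetime.fromisoformat(row["timestamp"].replace("Z", "+00:00"))
        except ValueError:
            problems.append(f"line {line_no}: unparseable timestamp {row['timestamp']!r}")
    return problems


# Made-up rows containing one problem of each kind, for illustration only.
SAMPLE = """id,actor,verb,object,timestamp
s1,visitor-a,answered,q1,2021-03-01T10:00:00Z
s1,visitor-a,answered,q1,2021-03-01T10:00:00Z
s2,,answered,q2,2021-03-01T10:01:00Z
s3,visitor-b,answered,q1,not-a-date
"""

for problem in check_extract(SAMPLE):
    print(problem)
```

An empty result does not prove the data is correct, only that it passed these structural checks; multi-source data would also need consistent identifiers for the same learner across systems.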

This exploration proved that context determines meaning. The same 5,195 visitors and 2,023 completions meant "training crisis" in one analysis and "strong portfolio engagement" in another.

Now I'm taking it a step further: I'm using Claude AI to expand the original Customer Service Recovery experience with agentic personas that anyone can test. Different customer personalities, different emotional states, different sentiments. It's an experiment in how AI can make scenario-based learning more dynamic and personalized.

Will I eventually cut down the original or the AI MVP Exploration version? Probably. But that's part of the learning process.